Niryo Ned One - Townend Joseph

Latest revision as of 13:15, 15 January 2024

SORTING BRICKS BY COLOUR USING THE NIRYO ARM BY JOSEPH TOWNEND

The goal of the project

The goal of my project was to build a robotic arm system that picks items up from a plate and places them into containers according to their colour. The program was developed for everyday robotics tasks, providing automation that can be used in daily life and in production to reduce time and labour.

Project details

The system uses a Niryo One robot arm to identify bricks by their colour and pick them up. A camera recognises the different colours. Once a brick's colour is identified, the arm picks the brick up from the workstation with a grabber module and delivers it to a predetermined area. The system runs until the camera detects that the workspace is empty.
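The cycle described above can be sketched as a simple loop: detect a brick, look up its destination, pick and place it, and repeat until the workspace is empty. This is a minimal simulation, not the project's Niryo code. The list `workspace` stands in for the camera's detections, the bin names are hypothetical, and sending unrecognised colours to an `"other"` area is an assumption.

```python
from typing import Dict, List, Tuple


def run_sorting(workspace: List[str], bins: Dict[str, str]) -> List[Tuple[str, str]]:
    """Simulate the sorting cycle until the camera sees an empty workspace.

    `workspace` holds the colours of the bricks the camera can still see
    (hypothetical stand-in; it is emptied as bricks are removed).
    `bins` maps each colour to a hypothetical drop-off area name.
    Returns the (colour, destination) pairs in the order they were handled.
    """
    moves = []
    while workspace:                          # repeat until no bricks are detected
        colour = workspace.pop(0)             # stand-in for camera detection
        target = bins.get(colour, "other")    # assumed fallback for unknown colours
        moves.append((colour, target))        # stand-in for grab-and-deliver
    return moves


if __name__ == "__main__":
    plan = run_sorting(["red", "blue", "yellow"], {"red": "bin A", "blue": "bin B"})
    print(plan)  # [('red', 'bin A'), ('blue', 'bin B'), ('yellow', 'other')]
```

Keeping detection and motion behind stand-ins like this lets the sorting logic be tested without the arm connected.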

Problems in the project

The main problems encountered were software-related. Initially, the software did not respond correctly to the camera module on the robotic arm. This was resolved by reinstalling the Niryo software, after which the camera could be initialised. Another problem was lighting. External light caused glare to reflect off the blocks, so the camera sometimes failed to recognise a block's true colour. That block was then skipped on that cycle of the code. A further issue was the orientation of some bricks. The gripper did not account for bricks lying at a slight angle, which caused a few failed pick-ups.
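The glare problem can be illustrated with a hue-based colour check. Glare washes a pixel out towards white, which lowers its saturation, so its hue no longer reflects the brick's colour. The sketch below uses only Python's standard library and is not the Niryo vision software's method. It assumes the image has already been cropped to one brick's pixels. The saturation, brightness and hue thresholds are illustrative values that would need tuning for a real camera and room.

```python
import colorsys
from collections import Counter
from typing import Optional, Sequence, Tuple

RGB = Tuple[int, int, int]

# Illustrative thresholds (assumptions, not measured values)
MIN_SATURATION = 0.35  # washed-out pixels (glare, white, grey) fall below this
MIN_VALUE = 0.20       # very dark pixels also have an unreliable hue


def classify_pixel(rgb: RGB) -> Optional[str]:
    """Return 'red', 'green' or 'blue' for one 0-255 RGB pixel, or None if its hue is unreliable."""
    h, s, v = colorsys.rgb_to_hsv(*(c / 255.0 for c in rgb))
    if s < MIN_SATURATION or v < MIN_VALUE:
        return None  # glare or shadow: no usable colour information
    if h < 0.05 or h >= 0.92:  # hue wraps around at red
        return "red"
    if 0.22 <= h < 0.45:
        return "green"
    if 0.55 <= h < 0.75:
        return "blue"
    return None


def classify_region(pixels: Sequence[RGB], min_fraction: float = 0.3) -> Optional[str]:
    """Majority vote over one brick's pixels; None means 'skip this brick for now'."""
    votes = Counter(c for c in map(classify_pixel, pixels) if c is not None)
    if not votes:
        return None
    colour, count = votes.most_common(1)[0]
    # If glare hides too much of the brick, refuse to guess
    return colour if count >= min_fraction * len(pixels) else None
```

When glare covers most of a brick, `classify_region` returns `None`. In this sketch, that corresponds to a brick being skipped on a cycle.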

Conclusion

In conclusion, this project succeeded in sorting Lego bricks by colour. It identified the colours consistently and delivered the bricks to their predefined locations. It did so fast enough for the automation to be a viable alternative to human work.

Further developments

Further developments that this project can undertake include the following:

- Incorporate additional hardware to enable more automation. For example, objects could arrive on a conveyor belt that stops when a light sensor (such as an infrared sensor) is interrupted. This would allow a continuous supply of objects to be collected at a steady pace.

- Develop a method for aligning the objects so that the grabber can pick them up reliably. For example, if this were built into the conveyor belt, the objects could be guided into the desired orientation before they reach the grabber.

- Limit the amount of light the objects are exposed to. For example, the arm could work inside an enclosure that blocks background light, with a controlled light source mounted on the head of the arm. This would reduce glare and allow the camera to identify the colour of each object correctly.

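- Rewrite the program using ROS (Robot Operating System). ROS is a framework rather than a programming language. Organising the program as separate ROS nodes would make the code more modular and flexible, and would let several processes (for example, vision and motion control) run at the same time.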
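For the alignment idea, a useful first step is to measure how far each brick is rotated. The sketch below estimates a rectangular brick's long-axis angle from its four corners. That angle could then be used to rotate the gripper, or to flag a brick for realignment. It assumes the corners come from a top-down camera view and are listed in order around the outline. The function names and the 5 degree tolerance are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def long_axis_angle(corners: Sequence[Point]) -> float:
    """Angle in degrees, in [-90, 90), of a rectangular brick's long side.

    `corners` are the four corners in order around the outline (assumed input).
    """
    (x0, y0), (x1, y1), (x2, y2) = corners[0], corners[1], corners[2]
    edge_a = (x1 - x0, y1 - y0)
    edge_b = (x2 - x1, y2 - y1)
    # The longer of two adjacent edges lies along the brick's long axis
    dx, dy = edge_a if math.hypot(*edge_a) >= math.hypot(*edge_b) else edge_b
    angle = math.degrees(math.atan2(dy, dx))
    # A rectangle looks the same after a half turn, so fold into [-90, 90)
    return (angle + 90.0) % 180.0 - 90.0


def needs_realignment(corners: Sequence[Point], tolerance_deg: float = 5.0) -> bool:
    """True if the brick is rotated more than `tolerance_deg` from an assumed 0 degree gripper axis."""
    return abs(long_axis_angle(corners)) > tolerance_deg
```

Folding the angle into a half-turn range keeps the gripper rotation as small as possible, because a brick rotated by 170 degrees can be gripped as if it were rotated by -10 degrees.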

Photos and code

The code block and a video of the arm in operation are shown below.

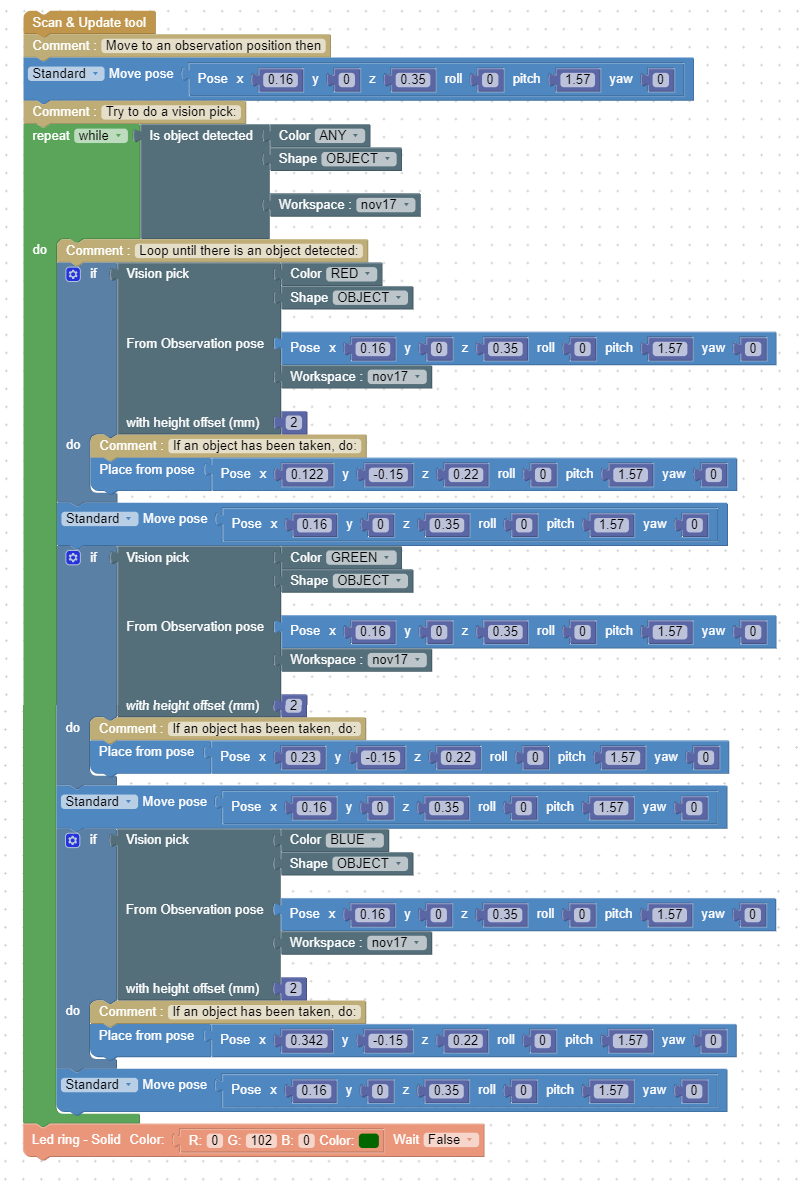

Code block for the colour sorting algorithm

Video of the code in operation

Further developments added

I have since implemented one of the proposed developments. A Dobot arm takes items off a conveyor belt, which stops when an infrared sensor is triggered. The arm then checks the colour of each block and sorts it. The control code, a Python script that uses the Dobot `dType` API, is given below.

Video for the conveyor belt element

Python code

```python
# Conveyor-belt colour sorting with a Dobot arm (DobotStudio Python script).
# `dType` (the Dobot API module) and `api` (the connected-device handle) are
# assumed to be provided by DobotStudio's script environment.

# Belt motor conversion factors, as generated by DobotStudio
STEP_PER_CIRCLE = 360.0 / 1.8 * 10.0 * 16.0   # motor steps per pulley revolution
MM_PER_CIRCLE = 3.1415926535898 * 36.0        # belt travel (mm) per pulley revolution


def belt_velocity(mm_per_s):
    """Convert a belt speed in mm/s into the step rate passed to SetEMotorEx."""
    return int(float(mm_per_s) * STEP_PER_CIRCLE / MM_PER_CIRCLE)


def scan_colour():
    # Move to the pick-up position and switch the suction cup on
    dType.SetPTPCmdEx(api, 0, 271.3141, 9.3845, 7.5857, 0, 1)
    dType.SetEndEffectorSuctionCupEx(api, 1, 1)
    dType.SetWAITCmdEx(api, 1, 1)

    # Hold the block over the colour sensor and pause before reading it
    dType.SetPTPCmdEx(api, 0, 183.4537, 54.7714, 34.58, 0, 1)
    dType.SetWAITCmdEx(api, 1, 1)

    # Move to the drop-off point for the detected colour
    if dType.GetColorSensorEx(api, 0) == 1:
        dType.SetPTPCmdEx(api, 0, 175.4124, -252.8005, -29.5195, 0, 1)
        print('red')
    elif dType.GetColorSensorEx(api, 2) == 1:
        dType.SetPTPCmdEx(api, 0, -29.6333, -267.2832, -29.436, 0, 1)
        print('blue')
    elif dType.GetColorSensorEx(api, 1) == 1:
        dType.SetPTPCmdEx(api, 0, 20.7189, -263.2587, -28.5473, 0, 1)
        print('green')
    else:
        dType.SetPTPCmdEx(api, 0, 101.2377, -263.2587, -31.5513, 0, 1)
        print('other')

    # Release the block
    dType.SetEndEffectorSuctionCupEx(api, 0, 1)


# Sort three blocks, one per cycle
for count in range(3):
    # Motion, end-effector and sensor set-up (applied at the start of every cycle)
    dType.SetPTPJointParamsEx(api, 50, 50, 50, 50, 50, 50, 50, 50, 1)
    dType.SetEndEffectorParamsEx(api, 59.7, 0, 0, 1)
    dType.SetPTPJumpParamsEx(api, 20, 100, 1)
    dType.SetInfraredSensor(api, 1, 2, 1)
    dType.SetColorSensor(api, 1, 1, 1)
    dType.SetEndEffectorSuctionCupEx(api, 0, 1)

    # Go to the home position with the belt stopped, then wait
    dType.SetPTPCmdEx(api, 0, 200, 0, 40, 0, 1)
    dType.SetEMotorEx(api, 0, 0, belt_velocity(0), 1)
    dType.SetWAITCmdEx(api, 3, 1)

    # Run the belt until the infrared sensor detects a block
    while dType.GetInfraredSensor(api, 2)[0] != 1:
        dType.SetEMotorEx(api, 0, 1, 10000, 1)

    # Stop the belt, then pick up, identify and sort the block
    dType.SetEMotorEx(api, 0, 0, belt_velocity(0), 1)
    scan_colour()
```
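The belt speed passed to `SetEMotorEx` is a motor step rate. The script's constants convert a belt speed in mm/s into that rate: step rate = (mm/s) × steps per revolution ÷ mm per revolution. Reading 360/1.8 × 10 × 16 as a 1.8° stepper motor with a 10:1 gear ratio and 16× microstepping, and 36 mm as the pulley diameter, is an interpretation of DobotStudio's generated code, not something stated in the script. The sketch below works through the conversion in both directions. The helper names are hypothetical.

```python
import math

# Assumed reading of the script's constants:
# 200 full steps/rev (1.8 degree stepper) x 10 (gear ratio) x 16 (microstepping)
STEPS_PER_REV = 360.0 / 1.8 * 10.0 * 16.0   # 32000 steps per pulley revolution
MM_PER_REV = math.pi * 36.0                  # belt travel per revolution of a 36 mm pulley


def mm_per_s_to_steps(speed_mm_s: float) -> int:
    """Belt speed in mm/s -> step rate, truncated the same way as int(vel) in the script."""
    return int(speed_mm_s * STEPS_PER_REV / MM_PER_REV)


def steps_to_mm_per_s(steps_per_s: float) -> float:
    """Step rate -> belt speed in mm/s."""
    return steps_per_s * MM_PER_REV / STEPS_PER_REV


if __name__ == "__main__":
    print(mm_per_s_to_steps(0))                # 0, the "stop belt" value used in the script
    print(mm_per_s_to_steps(50))               # 14147
    print(round(steps_to_mm_per_s(10000), 1))  # 35.3
```

With these constants, the fixed value 10000 used in the script's `while` loop corresponds to a belt speed of roughly 35 mm/s.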