Tello Arrow Navigator - Ibrahim Hayik

Revision as of 16:39, 14 February 2025

Goal of the Project

The goal of this project is to develop an autonomous system using the DJI Tello drone to detect arrows in a given environment and interpret their direction. This allows the drone to recognize directional instructions visually, which can be used for navigation and automation in robotics applications.

Description of the Project

This project utilizes the djitellopy library to control a DJI Tello drone and OpenCV (cv2) for real-time video processing. The drone streams video, and the system processes each frame to detect arrow shapes and determine their direction. The detected direction is displayed on the screen, allowing for further integration into autonomous movement systems where the drone can follow visual navigation cues.

Additionally, the project includes functionalities for:

- Real-time video streaming from the drone.

- Screenshot capture using the 'c' key.

- Video recording using the 'r' key.

- Detection of arrow direction and visualization of results on the video feed.

🔹 Note: This project only detects arrows and reports their direction. Source code that makes the drone move according to the detected direction is given below.
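The note above mentions separate code for movement. The sketch below is for illustration only and shows one way a detected direction could be turned into a djitellopy move command. `move_for_direction` and `MOVE_METHODS` are hypothetical names and the 30 cm step is an assumed value. `move_left`, `move_right`, `move_up` and `move_down` are djitellopy `Tello` methods that take a distance in centimetres.

```python
# Hypothetical helper: translate a detected direction into one djitellopy move.
# Assumption: `tello` is a connected, airborne djitellopy.Tello; the 30 cm step
# is illustrative (Tello SDK move distances must be 20-500 cm).
MOVE_METHODS = {
    "LEFT": "move_left",
    "RIGHT": "move_right",
    "UP": "move_up",
    "DOWN": "move_down",
}


def move_for_direction(tello, direction, step_cm=30):
    """Call the matching move_* method; return its name, or None if unknown."""
    method_name = MOVE_METHODS.get(direction)
    if method_name is None:
        return None  # e.g. no arrow was detected in this frame
    if not 20 <= step_cm <= 500:
        raise ValueError("Tello move distance must be between 20 and 500 cm")
    getattr(tello, method_name)(step_cm)
    return method_name
```

For example, with a connected `Tello` that has already called `takeoff()`, `move_for_direction(tello, "LEFT")` would issue `tello.move_left(30)`. Unknown labels return `None`, so frames without a detected arrow leave the drone where it is.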

Algorithm Description

The project follows these key steps:

1 - Video Stream Capture

- The DJI Tello drone is connected and starts streaming video.

- The frames from the drone are continuously read and processed.
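Step 1 could look roughly like the following sketch. It uses the djitellopy calls `connect()`, `streamon()`, `get_frame_read()` and `streamoff()`; the generator name `frame_stream` and its `max_frames` parameter are hypothetical and not taken from the project.

```python
def frame_stream(tello, max_frames=None):
    """Yield video frames from a DJI Tello, stopping the stream afterwards.

    `tello` is assumed to be a djitellopy.Tello (or any object with the same
    connect / streamon / get_frame_read / streamoff methods).
    `max_frames` is a hypothetical limit; None means stream until the caller stops.
    """
    tello.connect()    # put the drone into SDK command mode
    tello.streamon()   # ask the drone to start sending video
    reader = tello.get_frame_read()  # background reader that keeps the latest frame
    try:
        count = 0
        while max_frames is None or count < max_frames:
            yield reader.frame  # most recent decoded frame (a NumPy image array)
            count += 1
    finally:
        tello.streamoff()  # runs when the generator is exhausted or closed
```

In a real session this would be driven by something like `for frame in frame_stream(Tello()): ...` after `from djitellopy import Tello`. Because `reader.frame` always holds the newest frame, a loop that runs faster than the video may see the same frame twice.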

2 - Preprocessing the Image

- The frame is converted to grayscale.

- A binary threshold is applied to distinguish the arrow from the background.

- Morphological operations (closing) are used to reduce noise.

3 - Detecting Arrows in the Image

- Contours are extracted from the processed image.

- The largest contour is selected to filter out noise.

- A convex hull is generated around the contour to find the arrow shape.

4 - Determining the Arrow Direction

- The farthest point from the bounding box center is found.

- The angle between this point and the center is calculated.

- The angle is mapped to one of four directions:

  - 0° to 45° and 315° to 360° → "RIGHT"

  - 45° to 135° → "UP"

  - 135° to 225° → "LEFT"

  - 225° to 315° → "DOWN"
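The geometry of step 4 fits in a few lines of plain Python. This is a sketch under two assumptions that the description does not spell out: the image y coordinate is flipped so that "UP" means upward on screen, and the shared boundaries (45°, 135°, 225°, 315°) are resolved with half-open intervals. `bbox_center`, `farthest_point` and `arrow_direction` are hypothetical names.

```python
import math


def bbox_center(x, y, w, h):
    """Center of a bounding box given as (x, y, w, h), e.g. from cv2.boundingRect."""
    return (x + w / 2.0, y + h / 2.0)


def farthest_point(points, center):
    """Point with the largest Euclidean distance from `center` (taken as the tip)."""
    cx, cy = center
    return max(points, key=lambda p: math.hypot(p[0] - cx, p[1] - cy))


def arrow_direction(tip, center):
    """Map the center-to-tip angle onto RIGHT / UP / LEFT / DOWN."""
    dx = tip[0] - center[0]
    dy = center[1] - tip[1]  # image y grows downward, so flip it
    angle = math.degrees(math.atan2(dy, dx)) % 360.0  # 0..360, counter-clockwise
    if angle < 45 or angle >= 315:
        return "RIGHT"
    if angle < 135:
        return "UP"
    if angle < 225:
        return "LEFT"
    return "DOWN"
```

With OpenCV output, the points would be the hull reshaped to `(N, 2)` (for example `hull.reshape(-1, 2)`) and the center would be `bbox_center(*cv2.boundingRect(contour))`.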

5 - Displaying the Results

- The detected arrow and its direction are overlaid on the video stream.

- The user can see the processed output in real time.

6 - User Controls

- Press 'q' to quit the program.

- Press 'c' to capture an image.

- Press 'r' to start or stop video recording.

This project provides a foundation for autonomous navigation and interactive drone applications, making it useful for robotics, automation, and AI-driven vision tasks.

Example Pictures

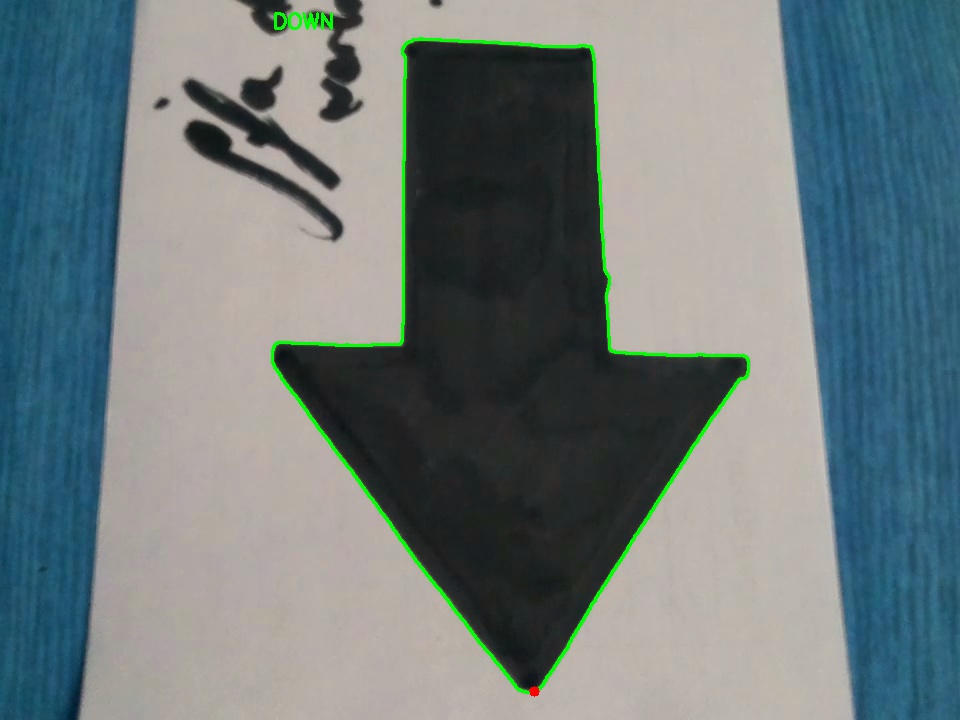

The image shows a detected arrow with a green contour and a red dot marking the farthest point. The system correctly identifies the direction as "DOWN" and displays it in the top-left corner:

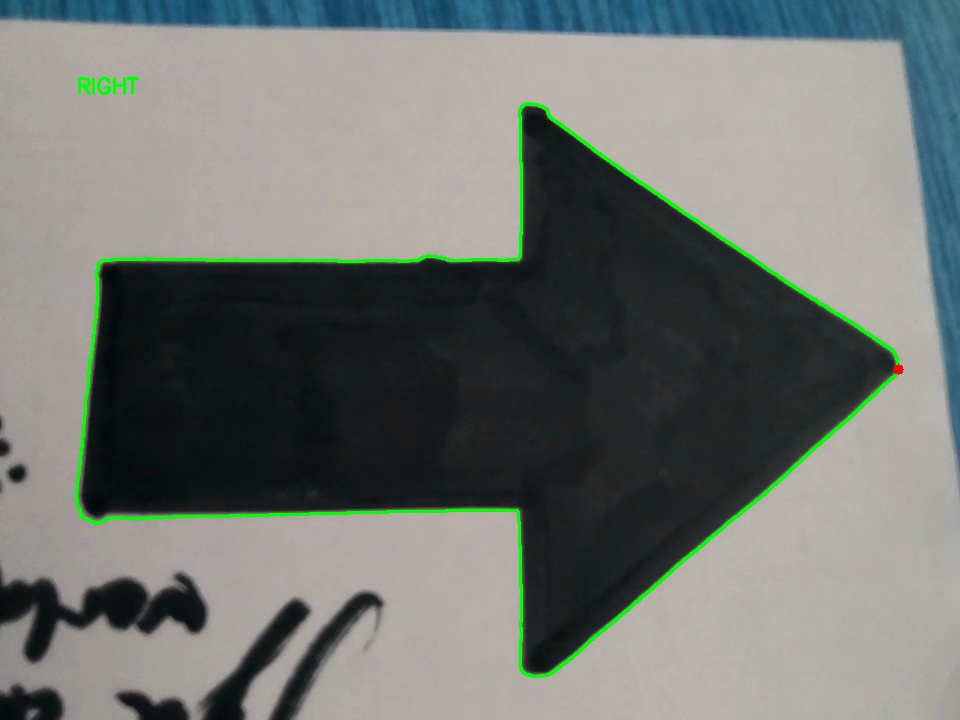

The image shows a detected arrow with a green contour and a red dot marking the farthest point. The system correctly identifies the direction as "RIGHT" and displays it in the top-left corner: