Tello Arrow Navigator - Ibrahim Hayik

Latest revision as of 12:02, 23 February 2025

Contents

- 1 Goal of the Project

- 2 Description of the Project (Tello Drone Arrow Navigator)

- 3 Description of the project (Tello Drone Detecting Lines)

- 4 Algorithm Description for Arrow Navigation

- 5 Algorithm Description for Detecting Lines

- 6 Example Pictures for Arrow Navigator

- 7 Example Pictures for Detecting Lines

- 8 Source Code for Arrow Navigator

- 9 Source Code for Detecting Lines

- 10 Video Sources

Goal of the Project

This project focuses on developing autonomous capabilities for the DJI Tello drone through two distinct applications: arrow detection for navigation and line counting for environmental analysis. In the first application, the drone will detect and interpret arrows in a given environment, allowing it to recognize directional instructions for autonomous movement in robotics applications. In the second application, the drone will autonomously fly and analyze its surroundings to detect and count lines drawn on a wall, providing a total line count. Both projects enhance the drone’s ability to process visual information for automation and real-world autonomous operations.

Description of the Project (Tello Drone Arrow Navigator)

This project utilizes the djitellopy library to control a DJI Tello drone and OpenCV (cv2) for real-time video processing. The drone streams video, and the system processes each frame to detect arrow shapes and determine their direction. The detected direction is displayed on the screen, allowing for further integration into autonomous movement systems where the drone can follow visual navigation cues.

Additionally, the project includes functionalities for:

- Real-time video streaming from the drone.

- Screenshot capture using the 'c' key.

- Video recording using the 'r' key.

- Detection of arrow direction and visualization of results on the video feed.

🔹 Note: The base program only detects arrows. A separate source file, linked in the source code section, also makes the drone move according to the detected directions.

Description of the project (Tello Drone Detecting Lines)

The project involves programming a Tello drone to autonomously navigate and detect lines drawn on a wall. The primary objective is to enable the drone to identify and count the total number of lines it encounters during its flight.

This requires integrating computer vision algorithms into the drone’s operation to process real-time video feeds and recognize specific line patterns or characteristics.

The final outcome will include:

- Accurate line detection and counting.

- A demonstration of autonomous flight control tailored to the detection process.

- Real-time data processing to provide results during or after the flight.

This project aims to enhance the understanding of autonomous systems and their potential applications in various domains, such as industrial inspection and pattern recognition.

Algorithm Description for Arrow Navigation

The project follows these key steps:

1 - Video Stream Capture

- The DJI Tello drone is connected and starts streaming video.

- The frames from the drone are continuously read and processed.

2 - Preprocessing the Image

- The frame is converted to grayscale.

- A binary threshold is applied to distinguish the arrow from the background.

- Morphological operations (closing) are used to reduce noise.

3 - Detecting Arrows in the Image

- Contours are extracted from the processed image.

- The largest contour is selected to filter out noise.

- A convex hull is generated around the contour to find the arrow shape.

4 - Determining the Arrow Direction

- The farthest point from the bounding box center is found.

- The angle between this point and the center is calculated.

- The angle is mapped to one of four directions:

- 0° to 45° and 315° to 360° → "RIGHT"

- 45° to 135° → "UP"

- 135° to 225° → "LEFT"

- 225° to 315° → "DOWN"
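The direction logic of step 4 can be sketched in plain Python. The function names farthest_point and arrow_direction are hypothetical, and two details are assumptions rather than facts from the project: the y axis is flipped because image coordinates grow downward, and each boundary angle (45°, 135°, 225°, 315°) is assigned to the range that starts at it.

```python
import math


def farthest_point(points, center):
    """Return the (x, y) point with the greatest distance from center."""
    cx, cy = center
    return max(points, key=lambda p: (p[0] - cx) ** 2 + (p[1] - cy) ** 2)


def arrow_direction(tip, center):
    """Map the angle from center to tip onto RIGHT, UP, LEFT or DOWN."""
    dx = tip[0] - center[0]
    dy = center[1] - tip[1]  # flipped: image y grows downward
    angle = math.degrees(math.atan2(dy, dx)) % 360
    if angle < 45 or angle >= 315:
        return "RIGHT"
    if angle < 135:
        return "UP"
    if angle < 225:
        return "LEFT"
    return "DOWN"
```

For example, with the center at (100, 100), a tip at (100, 20) lies straight above the center in the image and maps to "UP".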

5 - Displaying the Results

- The detected arrow and its direction are overlaid on the video stream.

- The user can see the processed output in real time.

6 - User Controls

- Press 'q' to quit the program.

- Press 'c' to capture an image.

- Press 'r' to start or stop video recording.
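One way to structure the key handling is a small function that receives the key code returned by cv2.waitKey and updates a state dictionary. The name handle_key and the state keys are hypothetical; the project may organize this differently.

```python
def handle_key(key, state):
    """Update state for one key code; return False when the loop should stop."""
    key &= 0xFF  # keep the low byte, since waitKey may set higher bits
    if key == ord("q"):
        return False
    if key == ord("c"):
        state["capture_requested"] = True
    elif key == ord("r"):
        # 'r' toggles recording on and off
        state["recording"] = not state["recording"]
    return True
```

In a display loop this would be called once per frame, for example as handle_key(cv2.waitKey(1), state).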

This project provides a foundation for autonomous navigation and interactive drone applications, making it useful for robotics, automation, and AI-driven vision tasks.

Algorithm Description for Detecting Lines

1. Line Detection (detecting_lines function)

Purpose: Processes a single video frame to detect and highlight lines.

Steps:

- Grayscale Conversion: Converts the frame to grayscale for easier processing.

- Binary Thresholding: Converts the grayscale image into a binary image (black and white) to highlight features.

- Edge Detection: Applies the Canny edge detection algorithm to find edges in the binary image.

- Dilation: Thickens the detected edges to make line detection more robust.

- Line Detection: Uses the Hough Line Transform (cv2.HoughLinesP) to detect straight lines in the processed image.

- Drawing Lines: Draws the detected lines on the original frame in green for visualization.

Output:

- The original frame with detected lines drawn on it.

- The binary edge-detected frame for debugging or further analysis.

2. Tello Drone Video Processing (tello_visualization_with_photo function)

Purpose: Streams video from the Tello drone, processes it for line detection, and allows the user to interact with the feed.

Features:

- Live Video Stream: Continuously fetches and displays the video feed from the drone.

- Line Detection: Applies the detecting_lines function to the video stream in real-time.

- Photo Capture: Press 'c' to capture and save the processed frame with lines highlighted. Press 'v' to capture and save the raw, unprocessed frame.

- Emergency Stop: Press 'e' to land the drone immediately in case of issues.

- Battery Monitoring: Automatically lands the drone if the battery level drops below a specified threshold.
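The two safety features can be combined into one check that runs once per frame. This is a sketch: safety_check and the 20 % default threshold are hypothetical, and the drone argument is assumed to be a djitellopy Tello object (or anything else that offers get_battery() and land()).

```python
def safety_check(drone, key, min_battery=20):
    """Land and return True if 'e' was pressed or the battery is low."""
    emergency = (key & 0xFF) == ord("e")
    low_battery = drone.get_battery() < min_battery
    if emergency or low_battery:
        drone.land()
        return True
    return False
```

Passing the drone in as a parameter keeps the check easy to try out with a stand-in object before flying.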

3. Drone Movements (drone_movements function)

Purpose: Demonstrates basic autonomous drone movements.

Actions:

- Takeoff: The drone takes off and gains altitude.

- Move Up: The drone moves up to a specified height (110 cm in this case).

- Landing: The drone lands after a delay.

- Note: Additional movements like forward motion and rotations are commented out but can be included.
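A minimal version of this movement sequence might look like the sketch below. The parameter names and the hover delay are assumptions; takeoff(), move_up() and land() are the djitellopy calls assumed to be behind the listed actions, and move_up() raises the drone by the given number of centimetres relative to its current height.

```python
import time


def drone_movements(drone, height_cm=110, hover_seconds=5.0):
    """Take off, climb by height_cm, wait, then land."""
    drone.takeoff()
    drone.move_up(height_cm)
    time.sleep(hover_seconds)  # the delay before landing
    drone.land()
```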

4. Main Control Flow (main function)

Purpose: Coordinates the initialization and execution of video processing and drone movements.

Steps:

- Drone Initialization: Connects to the Tello drone and starts video streaming.

- Threading: Uses two threads, one for video streaming and line detection and another for executing drone movements simultaneously.

- Graceful Exit: Handles keyboard interrupts and cleans up resources (e.g., stopping the video stream and disconnecting the drone).
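The two-thread layout with a clean shutdown can be sketched with the standard threading module. The name run_concurrently and the use of a shared Event to stop the video loop are assumptions made for illustration; the project's main function may coordinate its threads differently.

```python
import threading


def run_concurrently(video_task, movement_task):
    """Run both tasks in parallel and stop the video task when movement ends."""
    stop_event = threading.Event()
    video_thread = threading.Thread(target=video_task, args=(stop_event,), daemon=True)
    move_thread = threading.Thread(target=movement_task, daemon=True)
    video_thread.start()
    move_thread.start()
    try:
        move_thread.join()
    except KeyboardInterrupt:
        pass  # Ctrl+C falls through to the cleanup below
    finally:
        stop_event.set()  # ask the video loop to exit
        video_thread.join(timeout=5)
```

Here video_task is expected to check stop_event regularly and return once it is set; stopping the stream and disconnecting the drone would follow after this function returns.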

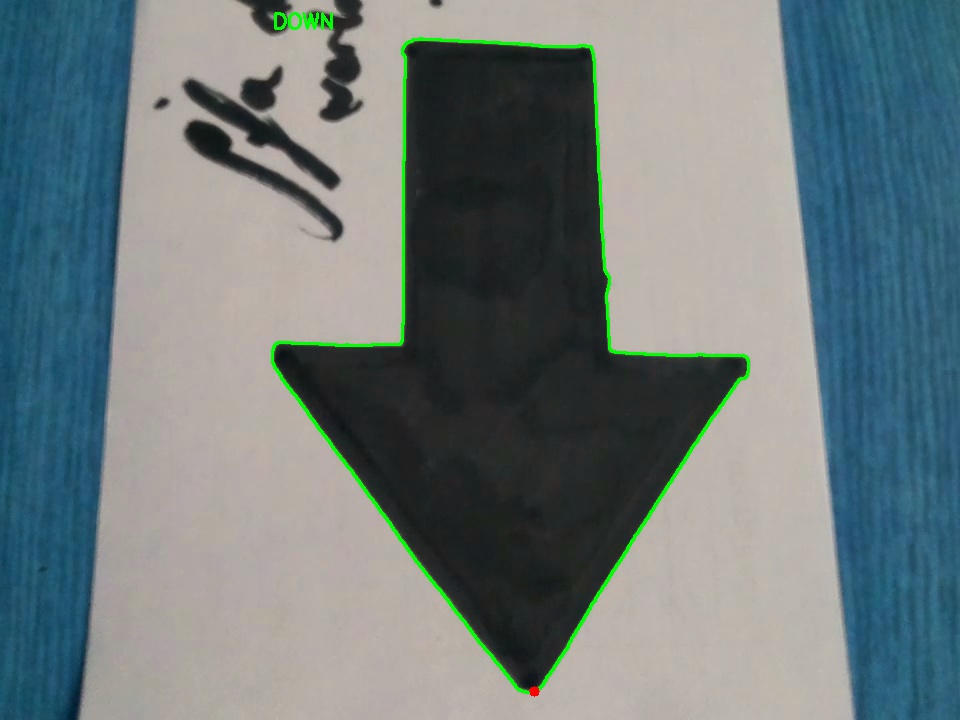

The image shows a detected arrow with a green contour and a red dot marking the farthest point. The system correctly identifies the direction as "DOWN" and displays it in the top-left corner:

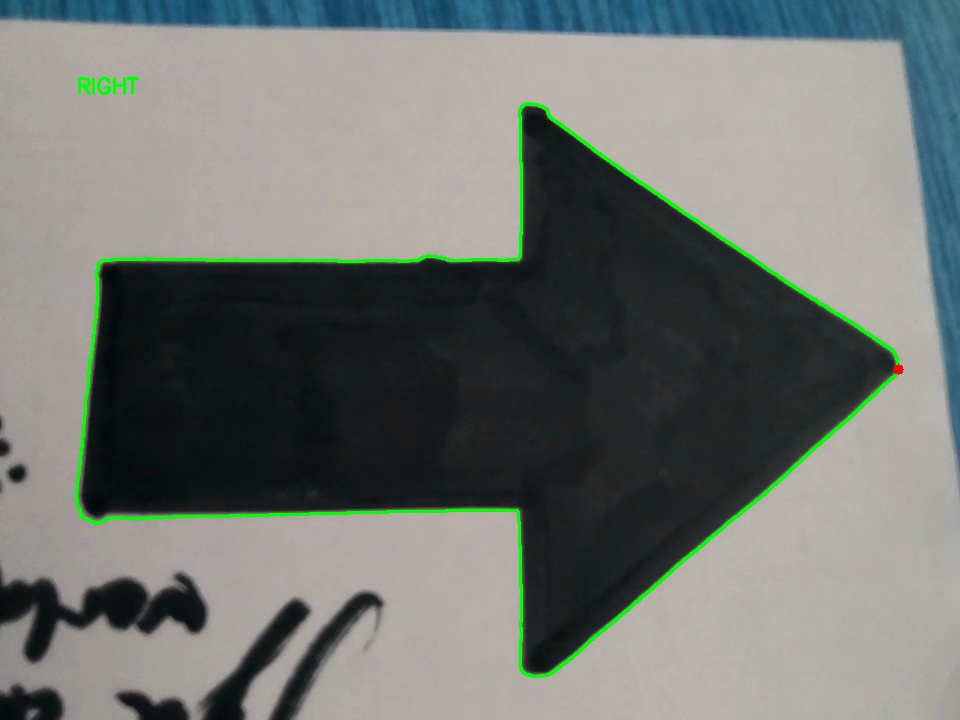

The image shows a detected arrow with a green contour and a red dot marking the farthest point. The system correctly identifies the direction as "RIGHT" and displays it in the top-left corner:

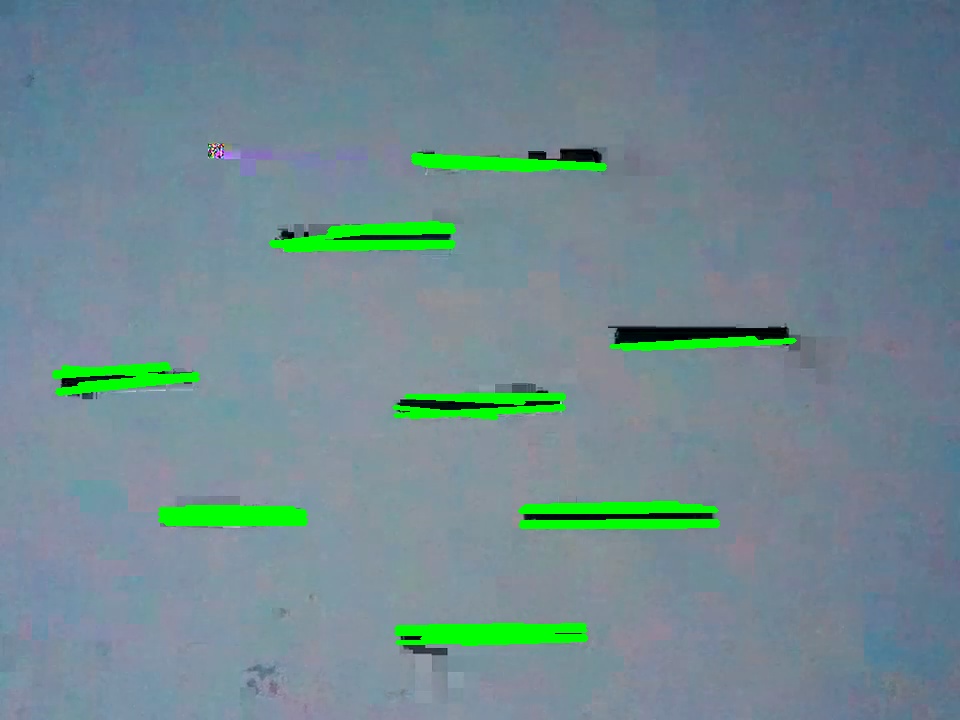

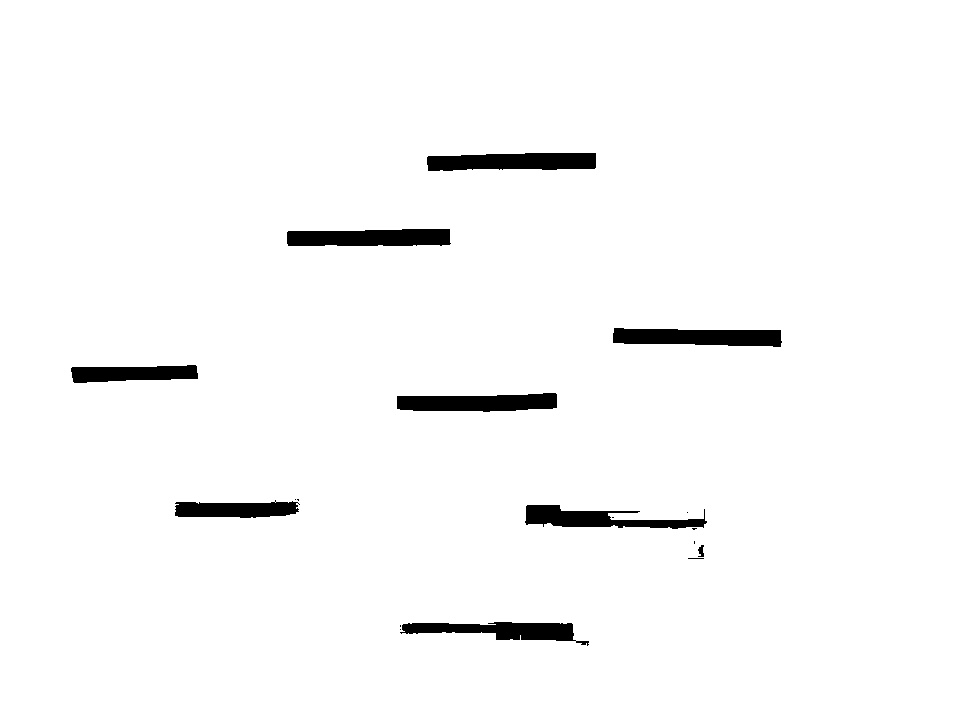

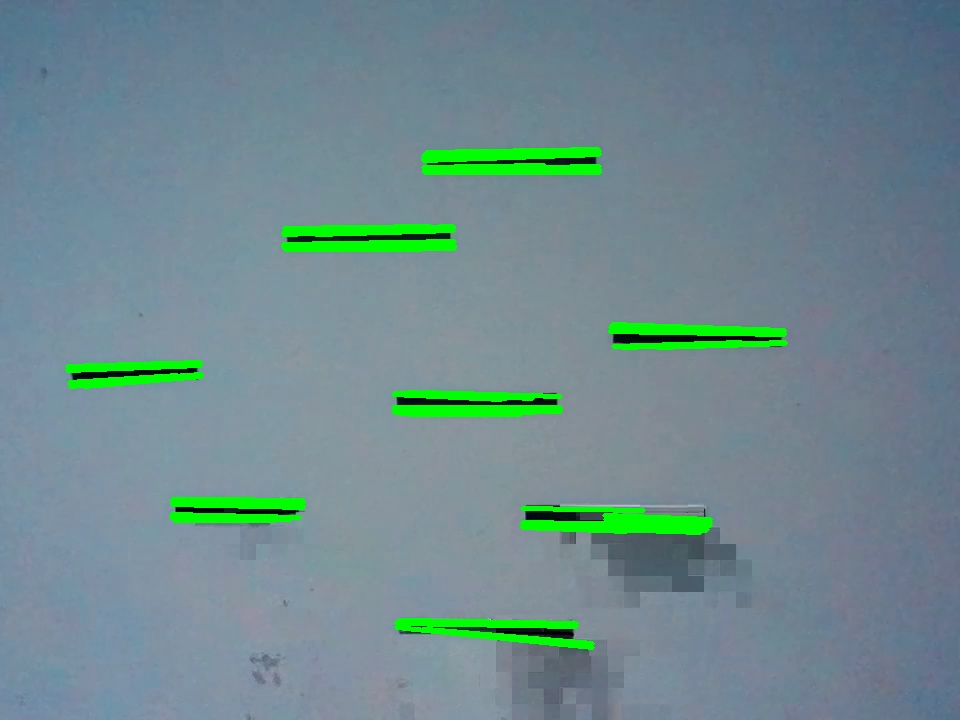

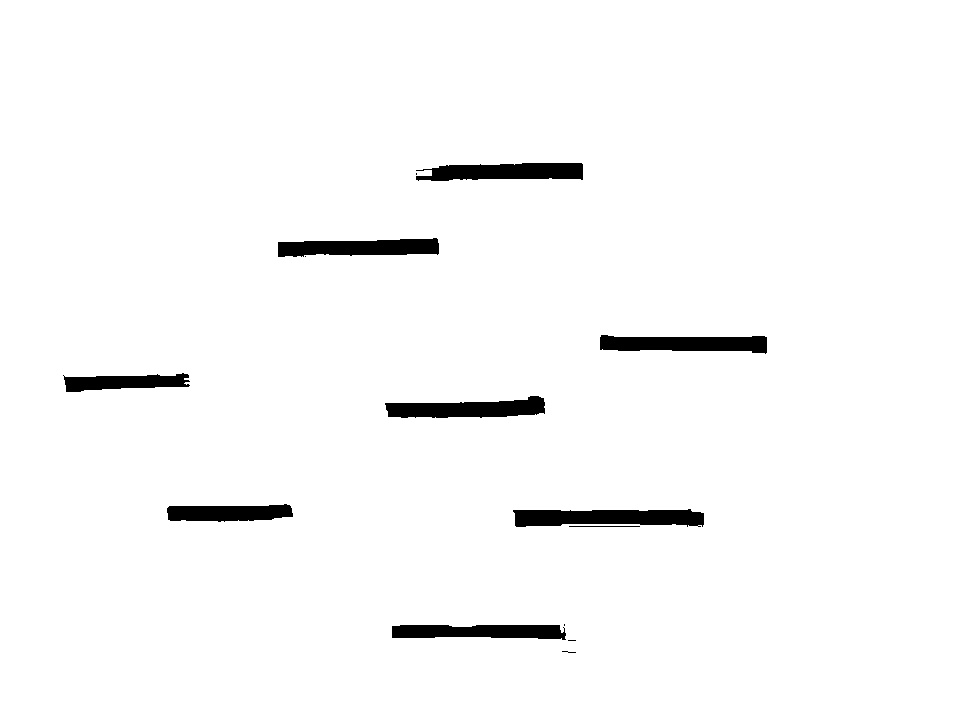

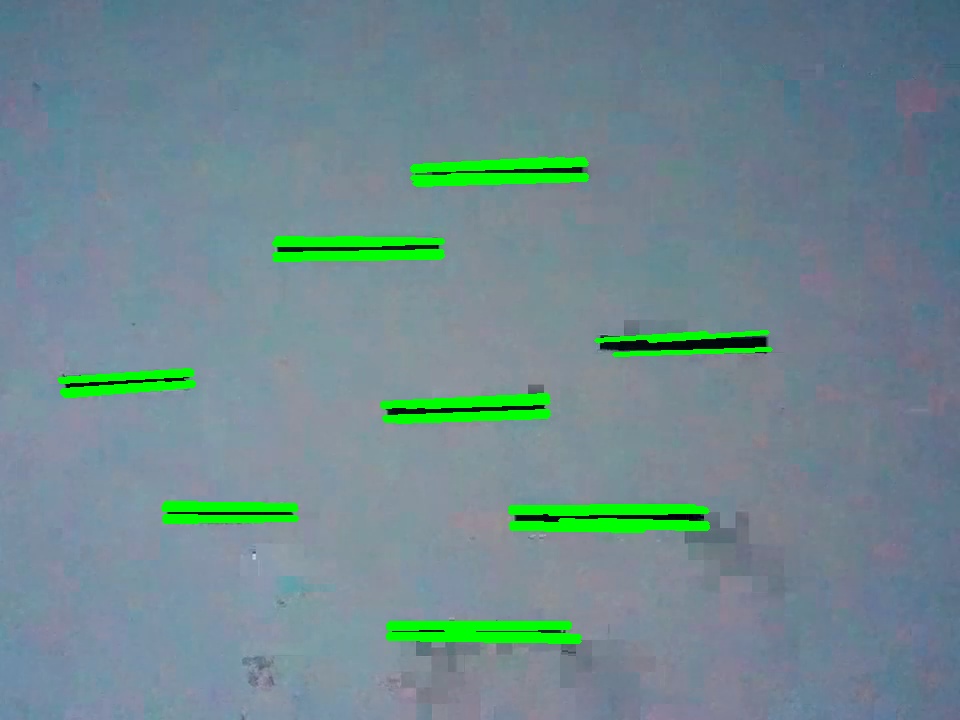

Example Pictures for Detecting Lines

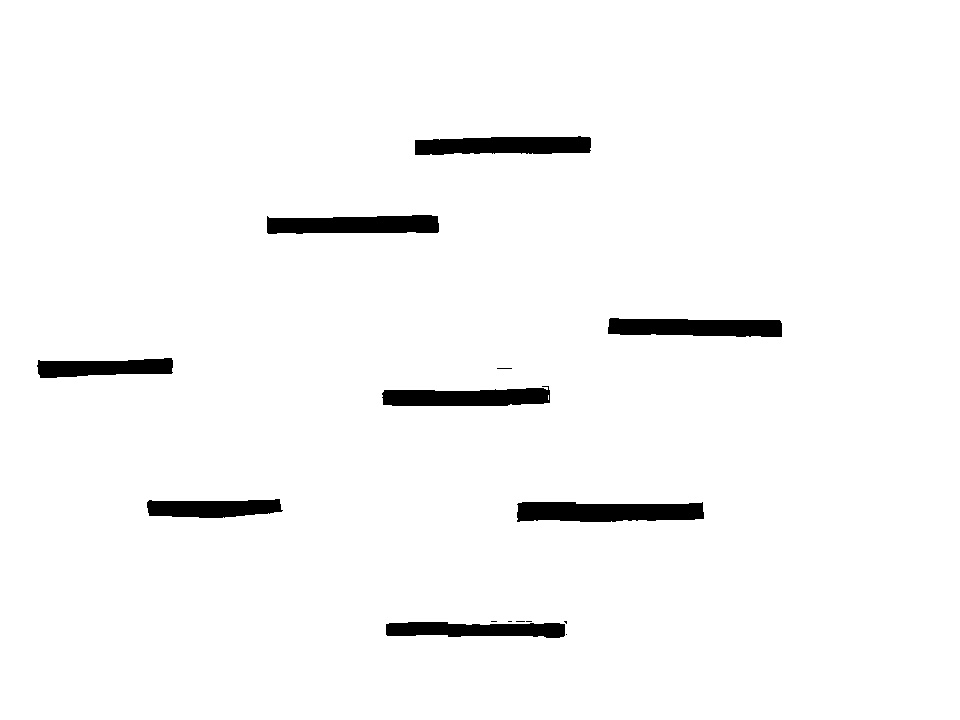

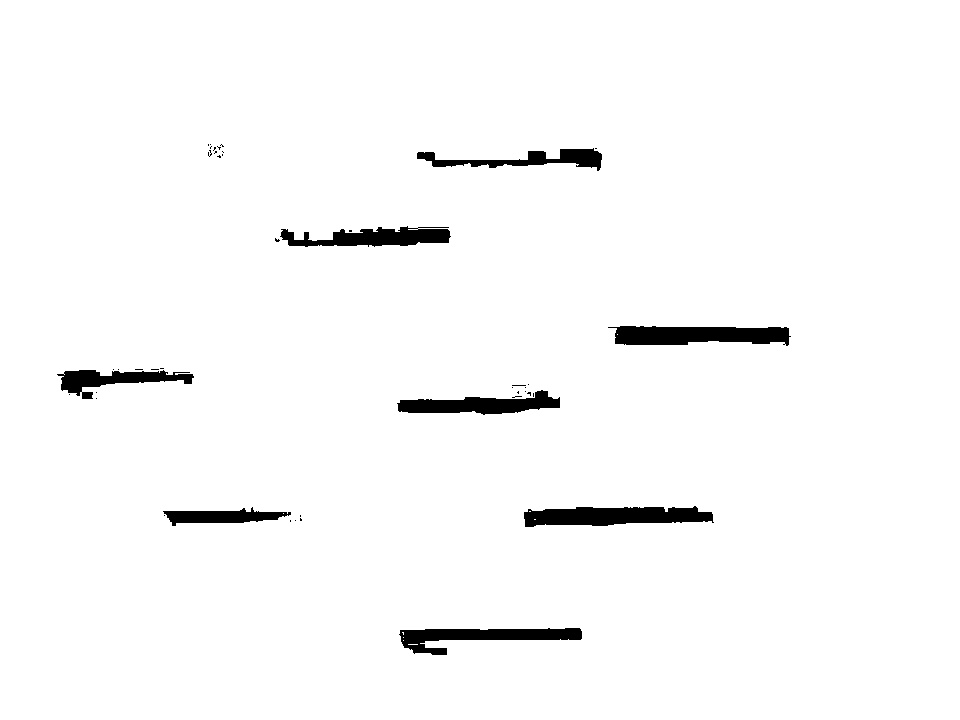

The pictures below are named using a format such as File:Drone_20250115-161129_(?), where (?) indicates the type of frame, either binary or processed, as described below.

The terms "binary frame" and "processed frame" refer to specific stages of the image processing pipeline:

Binary Frame:

Definition: A binary frame is a simplified version of the image, where each pixel is either black (0) or white (255). This is achieved using a technique called thresholding, which converts all pixel values above a certain threshold to white and all values below it to black.

Purpose: Binary frames are used to highlight significant features (like edges) in the image and eliminate unnecessary details such as colors and gradients.

How it's used: It simplifies the process of detecting lines by focusing on high-contrast regions in the image.
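The thresholding rule can be shown on a tiny grayscale image written as nested lists. This is a pure-Python illustration, not the project's code; to_binary and the threshold of 127 are hypothetical. It follows the same rule as cv2.threshold with cv2.THRESH_BINARY, where a pixel exactly equal to the threshold becomes black.

```python
def to_binary(gray_rows, thresh=127):
    """Turn each pixel into 255 if it is above thresh, otherwise 0."""
    return [[255 if pixel > thresh else 0 for pixel in row] for row in gray_rows]


gray = [
    [0, 127, 128],
    [200, 50, 255],
]
binary = to_binary(gray)
```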

Processed Frame:

Definition: The processed frame is the original video frame with additional visual information, such as lines detected by the Hough Line Transform. It retains the original colors and details but overlays the detected features (e.g., lines drawn in green).

Purpose: This frame is used for visualization, helping the user see how the algorithm interprets and detects features within the original video feed.

How it's used: It provides feedback to the user, showing the real-time results of the line detection algorithm.

Why Are They Important?

- The binary frame is crucial for the computational process of detecting lines, as it isolates the essential structures in the image.

- The processed frame allows users to visually confirm and understand how the detected features align with the real-world scene captured by the drone.

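Each photo below is an example of either a binary frame or a processed frame:

[[File:Drone_20250115-161129_BINARY_FRAME.jpg]]

[[File:Drone_20250115-161129_PROCESSED_FRAME.jpg]]

[[File:Drone_20250115-161134_BINARY_FRAME.jpg]]

[[File:Drone_20250115-161134_PROCESSED_FRAME.jpg]]

[[File:Drone_20250115-161138_BINARY_FRAME.jpg]]

[[File:Drone_20250115-161138_PROCESSED_FRAME.jpg]]

[[File:Drone_20250115-161141_BINARY_FRAME.jpg]]

[[File:Drone_20250115-161141_PROCESSED_FRAME.jpg]]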

Source Code for Arrow Navigator

Source code for detecting the arrows and directions:

Source code for detecting the arrows/directions and making movements accordingly:

[[Media:Test_1.zip]]

Source Code for Detecting Lines

Download zip file (Python source code):

- [[File:Test_2.zip]]

Video Sources

Videos demonstrating autonomous flight, line detection, recognition, and counting functionality:

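video 1: [https://youtube.com/shorts/GlFybmGehpI?feature=share TELLO DRONE VIDEO-1]

video 2: [https://youtu.be/rNqbnIgl4oE TELLO DRONE VIDEO-2]

video 3: [https://youtu.be/McAZiWBhnM4 TELLO DRONE VIDEO-3]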