Avoiding in a corridor

Latest revision as of 10:33, 22 June 2012

Authors: Frederic Arendt, Tiago Ferreira, Frederico Sousa

About project

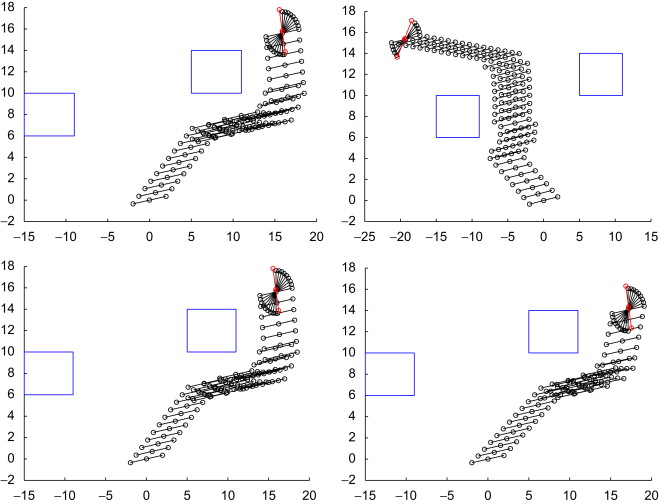

The goal of this project is to make two robots travel through a corridor at the same time until they come face to face. One of them must then find a free slot where it can wait until the other robot has passed and the way is clear. To achieve this, we will use a Reinforcement Learning algorithm.

Reinforcement learning algorithm (Q-Learning)

Reinforcement learning is a learning paradigm concerned with learning to control a system so as to maximize a numerical performance measure that expresses a long-term objective. Research in reinforcement learning aims to develop efficient learning algorithms and to understand their merits and limitations. The field is of great interest because of its many practical applications, ranging from problems in artificial intelligence to operations research and control engineering.
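One common way to express such a long-term objective (an assumption for illustration; this page does not specify the measure used) is the discounted return G = r_0 + γ·r_1 + γ²·r_2 + …, where the discount factor γ in [0, 1) weights future rewards less than immediate ones. The minimal Java sketch below computes it for a hypothetical reward sequence; the class and method names are illustrative only.

```java
public class DiscountedReturn {

    /**
     * Computes the discounted return G = r_0 + gamma*r_1 + gamma^2*r_2 + ...
     * for a finite sequence of rewards (an empty sequence gives 0).
     */
    static double discountedReturn(double[] rewards, double gamma) {
        double g = 0.0;
        // Walk backwards so each step is g = r_t + gamma * g (Horner's scheme).
        for (int t = rewards.length - 1; t >= 0; t--) {
            g = rewards[t] + gamma * g;
        }
        return g;
    }

    public static void main(String[] args) {
        // Hypothetical episode: three step penalties, then a reward for reaching the goal.
        double[] rewards = {-1.0, -1.0, -1.0, 10.0};
        System.out.println(discountedReturn(rewards, 0.9));
    }
}
```

For this sequence the return is -1 + 0.9·(-1) + 0.81·(-1) + 0.729·10 = 4.58, so the delayed goal reward still outweighs the step penalties.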

Q-Learning Algorithm

Q-learning is a reinforcement learning technique that learns an action-value function Q(s, a), which gives the expected utility of taking action a in state s and following the optimal policy thereafter. One of its strengths is that it can compare the expected utility of the available actions without requiring a model of the environment. A recent variant called delayed Q-learning has shown substantial improvements, bringing probably approximately correct (PAC) learning bounds to Markov decision processes.
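To make the idea concrete, the sketch below runs tabular Q-learning with the standard one-step update Q(s, a) ← Q(s, a) + α·[r + γ·max_a' Q(s', a') − Q(s, a)] and ε-greedy exploration on a hypothetical one-dimensional corridor. The corridor length, the rewards (-1 per step, +10 at the goal) and the parameters α, γ, ε are illustrative assumptions, and this is not the authors' code. Note that the update only uses the observed transition (s, a, r, s'), never a model of the environment.

```java
import java.util.Random;

/** Tabular Q-learning on a hypothetical corridor: cells 0..N_CELLS-1, start at 0, goal at the last cell. */
public class CorridorQLearning {
    static final int N_CELLS = 6;          // hypothetical corridor length
    static final int LEFT = 0, RIGHT = 1;  // available actions
    static final double ALPHA = 0.5;       // learning rate (assumed)
    static final double GAMMA = 0.9;       // discount factor (assumed)
    static final double EPSILON = 0.1;     // exploration rate (assumed)

    final double[][] q = new double[N_CELLS][2]; // Q-table, initialised to 0
    final Random rng;

    CorridorQLearning(long seed) { rng = new Random(seed); }

    /** Deterministic move of one cell; the corridor ends act as walls. */
    static int step(int s, int a) {
        int next = (a == RIGHT) ? s + 1 : s - 1;
        return Math.max(0, Math.min(N_CELLS - 1, next));
    }

    /** +10 for entering the goal cell, otherwise a -1 step penalty. */
    static double reward(int next) {
        return next == N_CELLS - 1 ? 10.0 : -1.0;
    }

    /** Greedy action; ties go to LEFT. */
    int greedy(int s) {
        return q[s][RIGHT] > q[s][LEFT] ? RIGHT : LEFT;
    }

    /** With probability EPSILON pick a random action, otherwise the greedy one. */
    int epsilonGreedy(int s) {
        return rng.nextDouble() < EPSILON ? rng.nextInt(2) : greedy(s);
    }

    void train(int episodes) {
        for (int ep = 0; ep < episodes; ep++) {
            int s = 0;
            // Cap the episode length as a safety net; the goal cell is terminal.
            for (int t = 0; t < 1000 && s != N_CELLS - 1; t++) {
                int a = epsilonGreedy(s);
                int next = step(s, a);
                double r = reward(next);
                boolean terminal = next == N_CELLS - 1;
                // Q-learning target: r + gamma * max_a' Q(s', a'); no bootstrapping from the goal.
                double target = terminal ? r
                        : r + GAMMA * Math.max(q[next][LEFT], q[next][RIGHT]);
                q[s][a] += ALPHA * (target - q[s][a]);
                s = next;
            }
        }
    }

    public static void main(String[] args) {
        CorridorQLearning agent = new CorridorQLearning(42L);
        agent.train(500);
        for (int s = 0; s < N_CELLS - 1; s++) {
            System.out.printf("cell %d: Q(left)=%.2f Q(right)=%.2f -> %s%n",
                    s, agent.q[s][LEFT], agent.q[s][RIGHT],
                    agent.greedy(s) == RIGHT ? "right" : "left");
        }
    }
}
```

Because the environment is deterministic and every episode must pass through each cell moving right, the right-hand Q-values are expected to approach their optimal values (10 next to the goal, about 3.12 at the start), and the greedy policy should choose "right" in every cell. Zero initial values combined with negative step rewards make untried actions look attractive, which drives the early exploration.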

Results & Conclusion

Note: The final result doesn't implement Reinforcement Learning.

Source Code

RoboticProject (zip): ProjectoAI.zip

Material used

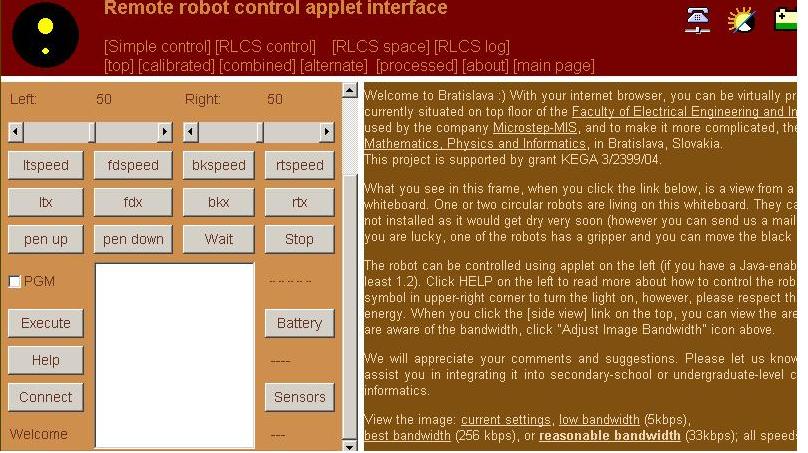

Virtual Robotics Laboratory

Eclipse Developer & Java Language