Simulacia POMDP

From RoboWiki


Revision as of 20:54, 4 July 2016

Project objective

The goal of this project was to create a program for computing and evaluating a POMDP algorithm on a simple two-state scenario. More precisely, my script computes a piecewise linear value function over the belief space for a given time horizon; this result is then used to simulate an agent with incomplete knowledge of its own state.
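A piecewise linear value function over a two-state belief space can be pictured as the upper envelope of a few linear functions of the belief p = P(state 1). The following is a minimal sketch, not the author's script: it assumes each linear piece is stored as a pair of values (one per state) plus the action it recommends, the "alpha vector" representation common in the POMDP literature. The names `evaluate` and `EXAMPLE_ALPHAS` and all numbers are hypothetical.

```python
"""Sketch: evaluating a piecewise linear value function over a 2-state belief.

Assumption (hypothetical, not from the original project): each linear piece is
a tuple (value_if_state_1, value_if_state_2, action_label).
"""

# Hypothetical linear pieces, one per action, used only for illustration.
EXAMPLE_ALPHAS = [
    (-10.0, 10.0, "terminal_A"),
    (10.0, -5.0, "terminal_B"),
]


def evaluate(alphas, p):
    """Return (value, action) at belief p = P(state 1).

    The value function is the pointwise maximum of the linear functions
    p * a1 + (1 - p) * a2, so it is piecewise linear and convex in p.
    On a tie, the piece listed first wins.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("belief p must lie in [0, 1]")
    candidates = [(a1 * p + a2 * (1.0 - p), action) for a1, a2, action in alphas]
    return max(candidates, key=lambda pair: pair[0])
```

For example, `evaluate(EXAMPLE_ALPHAS, 0.5)` returns `(2.5, 'terminal_B')`. Sampling `evaluate` on a grid of beliefs in [0, 1] gives the kind of curve one would plot with Matplotlib.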

Requirements

This project runs on Python 2.7 or later, including Python 3.x. It also requires the Matplotlib module, which is used to plot the value function.

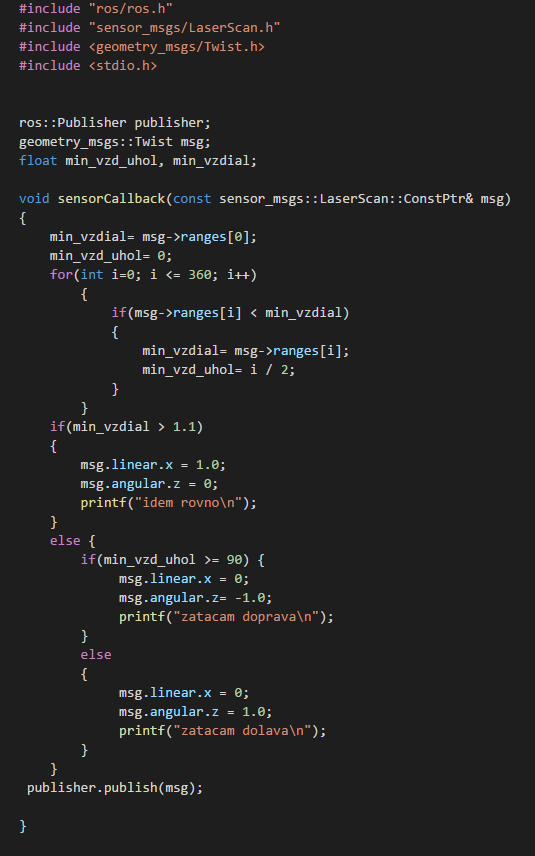

Parameters of the example

The simulated scenario consists of a robot agent with two possible states and three actions, two of which are terminal (each can be taken only once). Each terminal action yields a reward that depends on the state of the agent.
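Assuming that taking a terminal action ends the simulation, its value at belief p = P(state 1) is simply its expected reward, p * r(state 1) + (1 - p) * r(state 2), which is linear in p. The sketch below computes that expected reward and the belief at which two terminal actions are equally good. The reward values and the action names `terminal_A` / `terminal_B` are hypothetical placeholders, since this section does not list the example's actual numbers.

```python
"""Sketch: expected reward of terminal actions as a function of the belief.

Assumptions (hypothetical, not from the original project): the reward table
below and the action names. A terminal action is assumed to end the run.
"""

from fractions import Fraction

# Hypothetical rewards: REWARDS[action] = (reward in state 1, reward in state 2).
REWARDS = {
    "terminal_A": (Fraction(-10), Fraction(10)),
    "terminal_B": (Fraction(10), Fraction(-5)),
}


def expected_reward(action, p):
    """Expected reward of `action` when the agent believes P(state 1) = p."""
    r1, r2 = REWARDS[action]
    return p * r1 + (1 - p) * r2


def switching_belief(action_a, action_b):
    """Belief p at which both actions have equal expected reward.

    Solves p*ra1 + (1-p)*ra2 = p*rb1 + (1-p)*rb2 for p. Returns None when the
    two lines are parallel. A result outside [0, 1] means one action is
    better over the whole belief space.
    """
    ra1, ra2 = REWARDS[action_a]
    rb1, rb2 = REWARDS[action_b]
    denominator = (ra1 - ra2) - (rb1 - rb2)
    if denominator == 0:
        return None
    return (rb2 - ra2) / denominator
```

With these placeholder rewards the two lines cross at p = 3/7, so `terminal_B` is preferred for p > 3/7 and `terminal_A` for p < 3/7. Exact `Fraction` arithmetic is used only so the crossing point is not blurred by floating-point rounding.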