Difference between revisions of "Simulacia POMDP"

| Line 13: | Line 13: | ||

[[Image:Pomdp_example_cut.png|700px]] | [[Image:Pomdp_example_cut.png|700px]] | ||

| − | The actions ''u1'' and ''u2'' are terminal actions. <br> | + | The actions ''u1'' and ''u2'' are terminal actions with immediate payoffs. <br> |

| − | The action ''u3'' is a sensing action that potentially leads to a state transition. <br> | + | The action ''u3'' is a sensing action that potentially leads to a state transition.It costs -1. <br> |

The horizon is finite and γ=1. | The horizon is finite and γ=1. | ||

| + | In POMDP agent does not have certain knowledge about state, only belief. In this example, we have two-state belief that can be held by one variable. If '''belief''' = 0, agent is certain that he is in state x2, if '''belief''' is 1, he is certainly in state x1. | ||

| + | Moreover, action '''u3''' can potentially lead to state transition with probability 0.8. | ||

| + | For more information about used algorithm, see [http://dai.fmph.uniba.sk/courses/airob/sl/airob9.pdf] | ||

| + | |||

| + | |||

| + | == Source code == | ||

| + | Zipped folder containing the [[Media:pomdp_source.zip|project source code]]. | ||

== Results == | == Results == | ||

| + | Horizon 10 value vector | ||

[[Image:Horizon10.png|700px]] | [[Image:Horizon10.png|700px]] | ||

| + | |||

| + | This image graphically represents horizon 10 piecewise linear value function computed by this program. | ||

| + | Average reward obtained by simulated agent using this value function is here plotted against starting belief: | ||

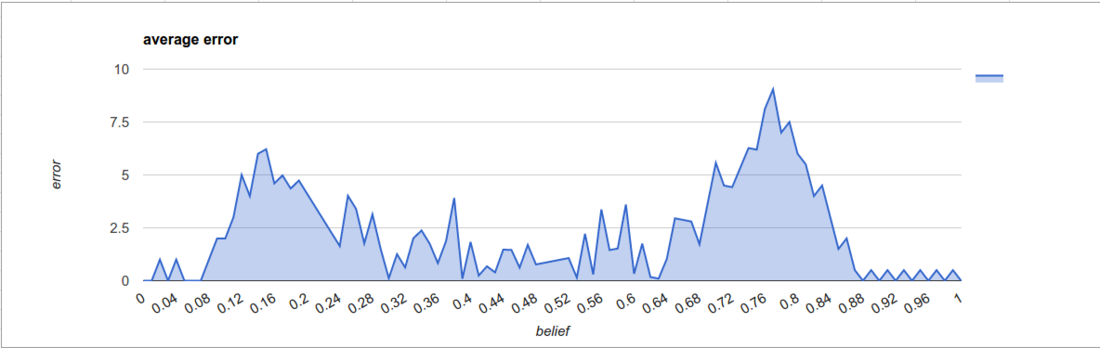

Results of simulation with horizon=10 | Results of simulation with horizon=10 | ||

[[Image:pomdp_graph.png | 1100px]] | [[Image:pomdp_graph.png | 1100px]] | ||

| + | We can see that prediction error is worse near kinks in our value function. | ||

| + | But prediction error rates do not change that much with changing horizon length, except from horizon 1, where robot is not allowed any sensing action. | ||

[[Image:Pomdp_errors.png | 900px]] | [[Image:Pomdp_errors.png | 900px]] | ||

| + | |||

| + | [[Image:Rewards.png | 900px]] | ||

Revision as of 01:18, 5 July 2016

Project objective

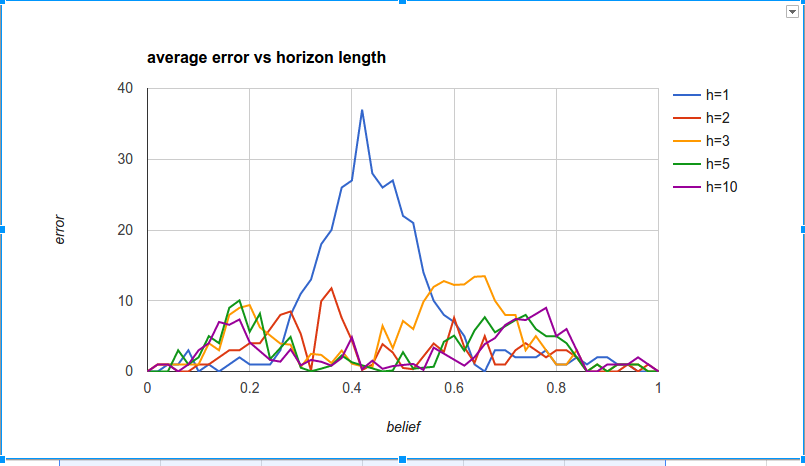

The goal of this project was to create a program that computes and evaluates a POMDP algorithm on a simple two-state scenario. More precisely, my script computes a piecewise linear value function over the belief space for a given time horizon. This result is then used in a simulation of an agent with incomplete knowledge of its state.

Requirements

This project runs on Python 2.7 or higher, including Python 3.x. It also requires the Matplotlib module, which is used to plot the value function.

Parameters of the example

The simulated scenario consists of a robot agent with two possible states and three actions, two of which are terminal actions (they can be taken only once). Each terminal action has an associated reward that depends on the state of the agent.

The actions u1 and u2 are terminal actions with immediate payoffs.

The action u3 is a sensing action that potentially leads to a state transition. It has a reward of -1, i.e. a cost of 1.

The horizon is finite and γ=1.

In a POMDP, the agent does not know its state with certainty; it only holds a belief about it. In this example there are two states, so the belief can be stored in a single variable. If belief = 0, the agent is certain that it is in state x2; if belief = 1, it is certain that it is in state x1.
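Because the belief is a single number between 0 and 1, a piecewise linear value function over it can be stored as a set of linear pieces and evaluated by taking their maximum. The following is a minimal sketch of that idea, not the project's actual code: the names `value`, `best_index` and `EXAMPLE_VECTORS` are hypothetical, and the numbers in `EXAMPLE_VECTORS` are illustrative placeholders rather than the payoffs of this example.

```python
def value(belief, alpha_vectors):
    """Evaluate a piecewise linear value function at one belief.

    belief is the probability of being in state x1 (so 1 - belief is x2).
    Each entry of alpha_vectors is a pair (v1, v2): the value of one linear
    piece if the state is certainly x1 or certainly x2.
    """
    return max(v1 * belief + v2 * (1.0 - belief) for v1, v2 in alpha_vectors)


def best_index(belief, alpha_vectors):
    """Return the index of the linear piece that is maximal at this belief."""
    values = [v1 * belief + v2 * (1.0 - belief) for v1, v2 in alpha_vectors]
    return values.index(max(values))


# Hypothetical linear pieces, chosen only to illustrate the evaluation.
EXAMPLE_VECTORS = [(-100.0, 100.0), (100.0, -50.0)]

if __name__ == "__main__":
    for b in (0.0, 0.5, 1.0):
        print(b, value(b, EXAMPLE_VECTORS), best_index(b, EXAMPLE_VECTORS))
```

Storing one pair per linear piece keeps the evaluation independent of the horizon: a longer horizon only adds more pairs to the list.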

Moreover, action u3 leads to a state transition with probability 0.8.
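The effect of u3 on the belief can be sketched as a two-step update: a prediction step for the possible state transition, followed by a Bayes update for the sensor reading. This is a sketch under stated assumptions, not the project's code. It assumes the 0.8 transition probability applies symmetrically to both states, and the function names and the sensor likelihoods passed to `measurement_update` are hypothetical, since the sensor model is not given in the text.

```python
def predict_after_u3(belief, flip_prob=0.8):
    """Belief in x1 after taking u3, before reading the sensor.

    Assumes the state flips with probability flip_prob from either state.
    """
    stayed_in_x1 = belief * (1.0 - flip_prob)
    moved_to_x1 = (1.0 - belief) * flip_prob
    return stayed_in_x1 + moved_to_x1


def measurement_update(belief, p_z_given_x1, p_z_given_x2):
    """Bayes update of the belief in x1 after observing a reading z.

    p_z_given_x1 and p_z_given_x2 are the (assumed) likelihoods of the
    reading in each state.
    """
    numerator = p_z_given_x1 * belief
    evidence = numerator + p_z_given_x2 * (1.0 - belief)
    if evidence == 0.0:
        raise ValueError("reading has zero probability under this belief")
    return numerator / evidence


if __name__ == "__main__":
    predicted = predict_after_u3(1.0)  # certain of x1 before u3
    # 0.7 and 0.3 are placeholder likelihoods, not values from the project.
    print(predicted, measurement_update(predicted, 0.7, 0.3))
```

Note that a belief of 0.5 is unchanged by the prediction step under the symmetric assumption, so only the sensor reading can move it.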

For more information about the algorithm used, see [1] (http://dai.fmph.uniba.sk/courses/airob/sl/airob9.pdf).

Source code

Zipped folder containing the project source code.

Results

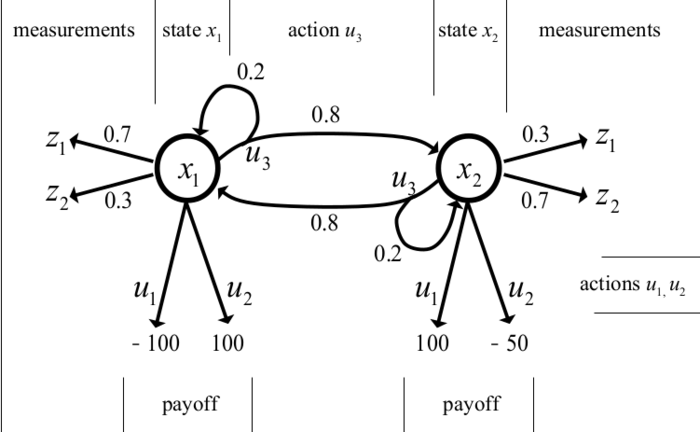

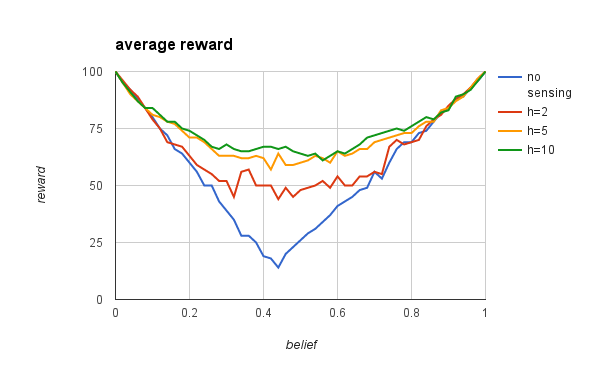

This image shows the horizon-10 piecewise linear value function computed by the program. The average reward obtained by a simulated agent using this value function is plotted against the starting belief:

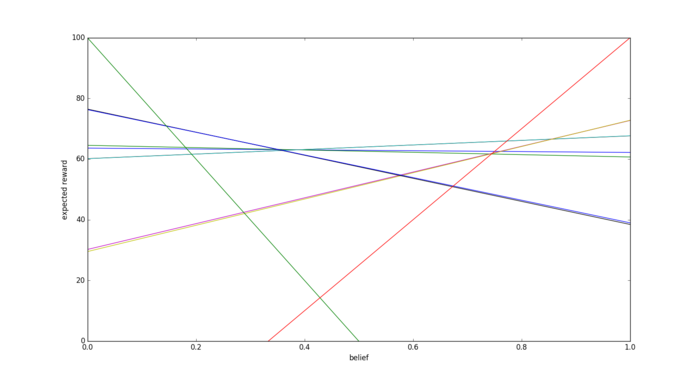

Results of simulation with horizon=10

We can see that the prediction error is larger near the kinks in the value function.

However, the prediction error rates do not change much with the horizon length, except for horizon 1, where the robot is not allowed any sensing action.
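The kinks mentioned above are beliefs at which two linear pieces of the value function cross. As a rough sketch (the function name `kink_between` is hypothetical and the vectors in the usage line are illustrative, not taken from the project), the crossing of two pieces stored as (v1, v2) pairs can be located as follows. A crossing is an actual kink of the value function only if both pieces are maximal at that belief.

```python
def kink_between(alpha_a, alpha_b):
    """Return the belief in [0, 1] where two linear pieces cross, or None.

    Each piece is a pair (v1, v2) and has the value
    v1 * belief + v2 * (1 - belief), i.e. slope v1 - v2 and intercept v2.
    """
    slope_a = float(alpha_a[0]) - float(alpha_a[1])
    slope_b = float(alpha_b[0]) - float(alpha_b[1])
    if slope_a == slope_b:
        return None  # parallel pieces never cross
    crossing = (float(alpha_b[1]) - float(alpha_a[1])) / (slope_a - slope_b)
    if 0.0 <= crossing <= 1.0:
        return crossing
    return None  # the pieces cross outside the belief interval


if __name__ == "__main__":
    # Illustrative vectors only.
    print(kink_between((-100.0, 100.0), (100.0, -50.0)))
```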