LCS in context of ML- Martin Mihál

Contents

Goal of project

Goal of the project is show possibilities of LCS algorithm in non-robotic enviroment - in area of - we can say - "pure" machine learning. I'll use data of activity of user of given website(http://www.publico.es/) from Piano(http://piano.io/) system and the goal of whole process will be define set of rules which best define users which will come back to website - that means they are "loyal" in some way and we can try to target them with locking of some content and deliver them offer. On the other site, users which are not loyal yet(they won't probablycoma back) - we want to give them freedom in browsing of given website and and develop interest or "addiction" and give them offer later.

Overview

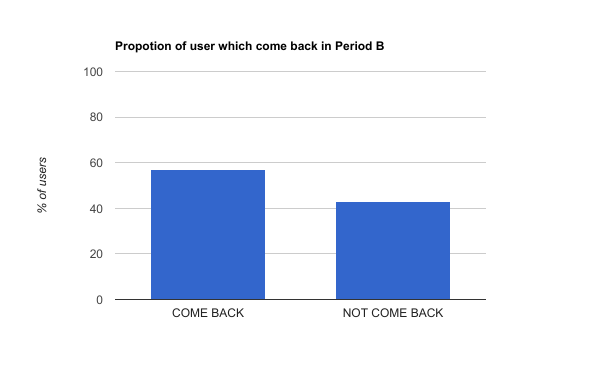

I'll use data of activity of user from period(in this experiment month) A and we want to predict if user will have any interaction in following period B. Between period A and B are some days because we want to track "long-term" loyality no "from-day-to-day" loyality.

Preparing of data

In the process of preparing data are important to take in consideration 2 factors:

- Understanding of data we have and problem we want solve

- Type of data which used algorithm need

As a target value I will use number of pageviews(PV) in period B.

For describing users I'm gonna use following data from period A(all data are in binar value (1/0) because that's format of data which LCS need, except number of PV in period A which I will use in different way). All selected variables has background in data - for example we want track if people from different cities/countries has different properties, we check also how many days there is between first and last PV in period A, if they visit particular sections etc. :

- jan_visits - only non-binary feature - number of PV in period A

- stayMoreThan7 - if the difference between first and last PV was more than 7 days in period A

- stayMoreThan14 - if the difference between first and last PV was more than 14 days in period A

- stayMoreThan21 - if the difference between first and last PV was more than 21 days in period A

- stayMoreThan28 - if the difference between first and last PV was more than 28 days in period A

- fromMobile - if user is from mobile or tablet device

- fromDesktop - if user is from desktop device

- HadDirectVisits - if user visit website direct at least once (write url in browser)

- HadSocialVisits - if user come from social website like FB, Twitter etc at least one

- HadExternalVisits - if user come from different source at least one

- HadSearchVisits - if user come from search engine at least one

- isIOSuser - if user is iOS user

- visitSection13 - of user visit at least 13th most popular section of website

- visitSection12 - of user visit at least 12th most popular section of website

- visitSection11- of user visit at least 11th most popular section of website

- visitSection10 - of user visit at least 10th most popular section of website

- visitSection9 - of user visit at least 9th most popular section of website

- visitSection8 - of user visit at least 8th most popular section of website

- visitSection7 - of user visit at least 7th most popular section of website

- visitSection6 - of user visit at least 6th most popular section of website

- visitSection5 - of user visit at least 5th most popular section of website

- visitSection4 - of user visit at least 4th most popular section of website

- visitSection3 - of user visit at least 3th most popular section of website

- visitSection2 - of user visit at least 2th most popular section of website

- visitSection1- of user visit at least 1th most popular section of website

- fromUK - if user is from UK

- fromBrazil - if user is from Brazil

- fromUSA - if user is from USA

- fromPortugal - if user is from Portugal

- fromOther - if user is not from Portugal or US or Brazil or UK (most user are from those countries)

- isAdblockUser - if user is using adbocker

- fromLisbao - if user is from Lisbao city

- fromBraga - if user is from Braga city

- fromCoimbra if user is from Coimbra city

- feb_visits - TARGET VALUE - HOW MANY PV HAS GIVEN USER IN FEBUARY

LCS

I'm going to try solve this problem with LCS, more precisely with XCS version of LCS. I don't want to go deep into topic about LCS here so I'm providing bunch of links which help in uderstanding of that:

- https://www.youtube.com/watch?v=CRge_cZ2cJc&t=619s

- http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.35.3146&rep=rep1&type=pdf

- https://pythonhosted.org/xcs/

Basicaly result of LCS is set of rule which define value in given column(# means doesn't matter that value).

For example rule "1##################1#######0##1###" in my data means "NOT FROM USA AND IS FROM LISBAO CITY AND IS iOS USER AND HAS DIFF BETWEEN 1. AND LAST DAYS AT LEAST 14 DAYS - OTHER VALUE DOESN'T MATTER"

For every rule from rule population we have:

- action - in our case 1/0 if user will come back or not

- fittnes - fittnes of given rule in

- experience -how old is rule in learning process

process of learning is doing by creation of new rules and test it, mutate them etc(check mentioned sources above) - the important aspect of learning is rewarding for given rules - it's doing by value FEB_VISIT - so if rule which are meet has lot of PV in period B, it will be stronger.

After all, we have set of rules and we will predict for every user 1 or 0:

- predict 1 - if MORE ACTION OF RULES ARE SAYING 1(vote are multiply by it's fittnes)

- predict 0 - if MORE ACTION OF RULES ARE SAYING 0(vote are multiply by it's fittnes)

Actually, it's kind of ensemble learning.

Data & Results

As I mentioned only not-binary variable is 'number of PV in period A'. I will use it in following way:

- FILTER USER WITH AT LEAST X PV(X > PV IN PERIOD A)

- DIVIDE TO TRAIN & TEST DATA

- RUN LCS ON TRAIN DATA

- RESULT FOR TEST & TRAIN DATA WITH Y BEST(BY FITNESS) LCS RULES

I set after experiments and trade off with time Y(number of used rules) to 100

1, for x > 3:

test data => TRUE POSITIVE(TP) = 1820, FALSE POSITIVE(FP) = 886, TRUE NEGATIVE(TN) = 622, FALSE NEGATIVE (FN) = 854 => Precision is 0.6726 train data => TRUE POSITIVE(TP) = 7480, FALSE POSITIVE(FP) = 3308, TRUE NEGATIVE(TN) = 2708, FALSE NEGATIVE (FN) = 3360

2, for x > 5:

test data => TRUE POSITIVE(TP) = 850, FALSE POSITIVE(FP) =376 , TRUE NEGATIVE(TN) = 233, FALSE NEGATIVE (FN) = 261 => Precision 0.6933

train data => TRUE POSITIVE(TP) = 3412, FALSE POSITIVE(FP) = 1505, TRUE NEGATIVE(TN) = 970, FALSE NEGATIVE (FN) = 958

1, for x > 7:

test data => TRUE POSITIVE(TP) = 684, FALSE POSITIVE(FP) = 569, TRUE NEGATIVE(TN) = 148, FALSE NEGATIVE (FN) = 84 => Precision 0.5459 train data => TRUE POSITIVE(TP) = 2638, FALSE POSITIVE(FP) = 2205, TRUE NEGATIVE(TN) = 664, FALSE NEGATIVE (FN) = 443

Best precision for this experiment set, almost70 %, is to have at least 5 PV and I'm providing also 15 most improtant rule for that:

- 111#100###00#0000##1##1###01#01#00

- 00000110#00##00####0#0#0#0#0100#00

- 1#####00#00#0###111#111000####100

- 0#####0###0#000#0#01####00#1001#0#

- #0000###00##00000#1##1##00##0##000

- 1110###10#0#0#000011#1#10##10##000

- 1#1###1##0000##010#1#1##0001#00#00

- #110#110000##00010#011###001000000

- 1100##1#0###00#0###0#1110#0100#0##

- 1110###10000#0#00###0#110#01##1000

- 11###011#0#0#000#####1#100#1001000

- #000#0#0#0000####0#00#1001#0000##0

- 1#101001####00000010001100#1#000##

- 1100###1##0#0000##0#1#1##0001##0#0

- #00#1#00##0#0#000##00#0#0##0000#00

- 11#01#1#0#0##0#00###1011#00100#00#

- #0#00##00#0##000#00#10##00010##000

Code

CODE HERE

Conclusion

LCS provide interesting approach to solving machine learning problem. The main advantage of this approach is extremely straightforward representation of rules from rule population on comparison of often used neural network or random forrest.